Many recent approaches in contrastive learning have worked to close the gap between pretraining on iconic images like ImageNet and pretraining on complex scenes like COCO. This gap exists largely because commonly used random crop augmentations obtain semantically inconsistent content in crowded scene images of diverse objects.

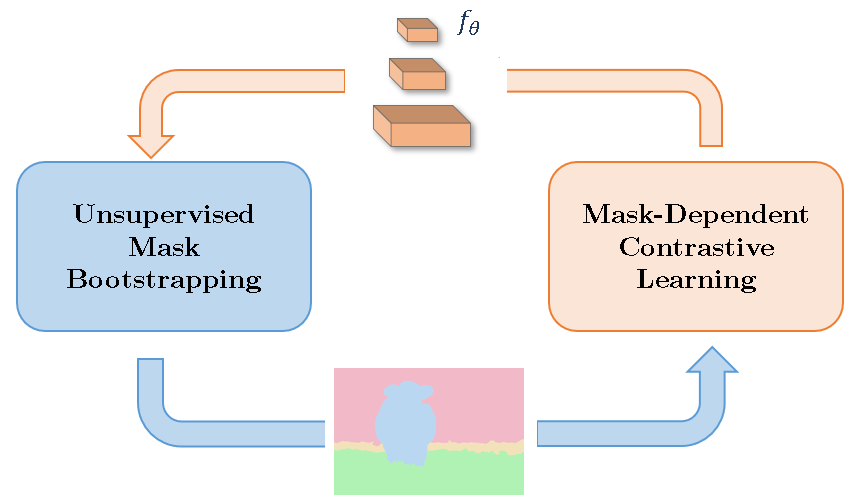

In this work, we propose a framework which tackles this problem via joint learning

of representations and segmentation. We leverage segmentation masks to train a model

with a mask-dependent contrastive loss, and use the partially trained model to

bootstrap better masks. By iterating between these two components, we ground the

contrastive updates in segmentation information, and simultaneously improve

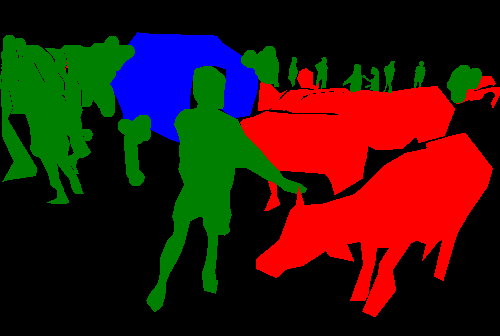

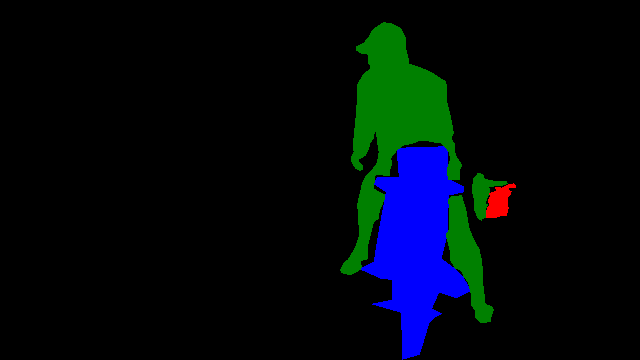

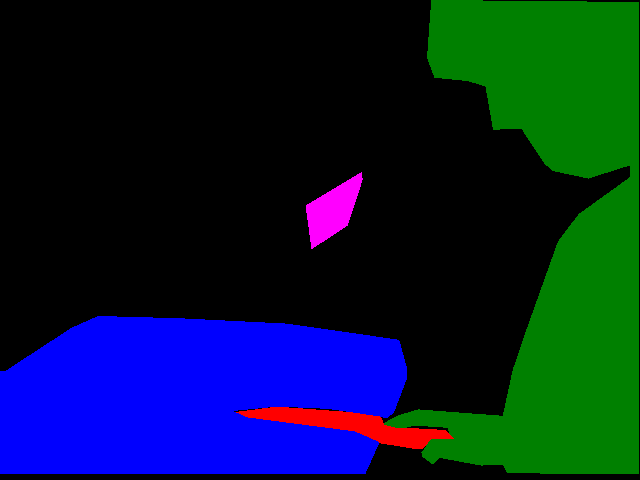

segmentation throughout pretraining. Experiments show our representations transfer

robustly to downstream tasks in classification, detection and segmentation.

We show that notions of objectness emerge naturally within the learned representations

following our bootstrapping process.
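The alternation between a mask-dependent contrastive loss and mask bootstrapping can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes three things the text above does not specify: mask embeddings are obtained by averaging per-pixel features inside each mask, the contrastive loss is an InfoNCE-style loss over matching masks in two views, and new masks are bootstrapped by k-means clustering of per-pixel features. All function names and parameters (`mask_pool`, `mask_contrastive_loss`, `bootstrap_masks`, `temperature`, `k`) are hypothetical.

```python
import numpy as np

def mask_pool(features, masks):
    """Average per-pixel features inside each mask (assumed pooling scheme).

    features: (H, W, D) array; masks: (K, H, W) binary array.
    Returns (K, D) L2-normalised mask embeddings.
    """
    flat_f = features.reshape(-1, features.shape[-1])           # (H*W, D)
    flat_m = masks.reshape(masks.shape[0], -1).astype(float)    # (K, H*W)
    area = flat_m.sum(axis=1, keepdims=True)
    pooled = flat_m @ flat_f / np.maximum(area, 1.0)            # empty masks pool to zero
    norm = np.linalg.norm(pooled, axis=1, keepdims=True)
    return pooled / np.maximum(norm, 1e-8)

def mask_contrastive_loss(z1, z2, temperature=0.1):
    """InfoNCE-style loss (assumption): row i of z1 and row i of z2 are positives,
    all other rows of z2 act as negatives."""
    logits = z1 @ z2.T / temperature                            # (K, K)
    logits = logits - logits.max(axis=1, keepdims=True)         # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))

def bootstrap_masks(features, k, iters=10, seed=0):
    """Cluster per-pixel features with k-means (assumed bootstrapping step).

    Returns a (k, H, W) boolean array in which every pixel belongs to one mask.
    """
    h, w, d = features.shape
    flat = features.reshape(-1, d)
    rng = np.random.default_rng(seed)
    centers = flat[rng.choice(flat.shape[0], size=k, replace=False)]
    labels = np.zeros(flat.shape[0], dtype=int)
    for _ in range(iters):
        dists = ((flat[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = flat[labels == j]
            if len(members) > 0:                                # keep old center if empty
                centers[j] = members.mean(axis=0)
    return labels.reshape(h, w)[None] == np.arange(k)[:, None, None]
```

In a full training loop under these assumptions, the encoder would be updated with `mask_contrastive_loss` on embeddings pooled from two augmented views that share masks, and `bootstrap_masks` would periodically be rerun on the encoder's current features to refresh the masks.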